The “why” and “how” of using simulations as diagnostics for learning

Learning organizations around the globe are feeling the impact of Covid-19. As the pandemic shifted workforces from centralized offices to work-from-home locations, L&D groups reacted by converting live, instructor-led classroom courses into digital content.[1] The change was reactive rather than strategic, though most organizations already had some portion of their curriculum designed as digital, self-paced eLearning or virtual instructor-led courses.

ATD recently published an article on micro-credentialing that emphasized the need to use small bites of training to fill individual and workforce gaps.[2] The time commitment to complete learning is small, which minimizes interruptions to work and reduces the productivity costs associated with lost time. Badging during the micro-credentialing process also drives completion through competition.

This approach, like many of the courses that were flipped from instructor-led training to eLearning during the early stages of the pandemic, focuses mostly on knowledge transfer. While knowledge gain is essential to learning, it is also important to practice behaviors that will be applied on the job. The process of failing in a safe environment and then consuming more learning helps build long-term retention of behaviors. While micro-credentialing has its benefits, especially for time savings, ETU recommends diagnosing skills first. Whereas micro-credentialing saves time by providing short bursts of learning, diagnostic tests allow learners to save time (and organizations to save costs) by avoiding redundant training entirely.

ETU embraces a flipped approach to learning. Rather than developing, then delivering, and then measuring, ETU starts with measurement. Figure 1 shows how ETU focuses on measurement first, then moves to learning and performance.

Figure 1. ETU’s Learning Development Cycle

Why and how does ETU start with measurement?

The why is simple, but it may be better to describe how measurement occurs first. After conducting a strategy session to align behaviors to business objectives, the ETU team determines with clients whether to use a diagnostic tool that tests if learners already have the knowledge, skills and behaviors required to do the job. The test is not a paper-and-pencil inventory. Nor is it an online survey full of tasks and rating scales. Instead, it is an immersive behavioral simulation.

In the simulation, the learner becomes part of a team with a project to complete. The learner makes choices throughout that are classified and scored as optimal, suboptimal and critical. Optimal choices receive the highest scores. Suboptimal and critical choices lead to a failing score and an opportunity to learn more about the topic. Sims easily capture learners’ performance data by following the choices they make.
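The scoring idea described above can be illustrated with a minimal sketch. It assumes hypothetical point values and a hypothetical pass mark; the article only states that optimal choices score highest and that suboptimal and critical choices pull a learner toward a failing score. The names `Choice`, `POINTS`, `PASS_THRESHOLD` and `score_attempt` are illustrative, not part of any ETU product.

```python
from dataclasses import dataclass

# Hypothetical point values: the article only says optimal choices score highest.
POINTS = {"optimal": 2, "suboptimal": 1, "critical": 0}
# Assumed pass mark; the article does not state one.
PASS_THRESHOLD = 0.8


@dataclass
class Choice:
    decision_id: str  # which decision point in the simulation
    category: str     # "optimal", "suboptimal", or "critical"


def score_attempt(choices):
    """Score one learner's run through a simulation from the choices made."""
    if not choices:
        raise ValueError("an attempt needs at least one choice")
    earned = sum(POINTS[c.category] for c in choices)
    possible = POINTS["optimal"] * len(choices)
    ratio = earned / possible
    return {
        "score": ratio,
        "passed": ratio >= PASS_THRESHOLD,
        "critical_count": sum(c.category == "critical" for c in choices),
    }


# Hypothetical attempt: two optimal, one suboptimal, one critical choice.
attempt = [
    Choice("kickoff", "optimal"),
    Choice("stakeholder-email", "suboptimal"),
    Choice("scope-change", "critical"),
    Choice("retrospective", "optimal"),
]
result = score_attempt(attempt)
print(result)
```

Because every choice is recorded with its category, the same data that produces the score can also show where a learner went wrong, which is what makes a simulation usable as a diagnostic.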

Getting back to the question of why, the diagnostic is used to customize the learning journey and save time and budget. The diagnostic simulation determines who needs more learning and who does not. Those who pass the diagnostic return to work, avoiding the frustration of having to attend redundant training. Those who do need additional learning receive a roadmap of their strengths and areas for improvement so they can have a personalized learning journey. Moreover, the organization benefits because it does not spend resources to train people who don’t need it.
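As a rough sketch of that routing step, the snippet below takes per-area diagnostic scores and returns either a "return to work" outcome or a roadmap of strengths and areas for improvement. The pass mark, the skill-area names and the function `route_learner` are assumptions made for illustration; the article does not describe how ETU implements this.

```python
# Assumed pass mark per skill area; the article does not state one.
PASS_MARK = 0.8


def route_learner(area_scores):
    """Turn per-area diagnostic scores (0.0 to 1.0) into a next step and roadmap."""
    strengths = sorted(a for a, s in area_scores.items() if s >= PASS_MARK)
    improve = sorted(a for a, s in area_scores.items() if s < PASS_MARK)
    # A learner with no gaps passes the diagnostic and skips the training.
    next_step = "return to work" if not improve else "personalized learning"
    return {"next_step": next_step, "strengths": strengths, "improve": improve}


# Hypothetical skill areas and scores for two learners.
passed = route_learner({"planning": 0.90, "communication": 0.85})
needs_learning = route_learner(
    {"planning": 0.90, "communication": 0.55, "prioritization": 0.70}
)
print(passed)
print(needs_learning)
```

The second learner would be pointed only at communication and prioritization, rather than repeating the planning content they have already demonstrated.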

Diagnostic simulations in action

As we examine the benefits of using simulations as diagnostic tools, it helps to take a look at the specific path one ETU client took. A global professional services firm found value in this “measure-first” approach and engaged with ETU to develop three diagnostics related to human-centered design topics: design thinking, agile ways of working, and data-driven storytelling.

Results proved valuable. Across more than 45,000 learners, the diagnostics showed that 37% of employees already had knowledge and skills in these areas. They were invited to learn more but were not required to do so. The remaining employees were instructed to learn from multiple and varied learning assets available through the LMS. Then, when they felt confident with the material, they were invited to complete an assessment on each of the three topics.

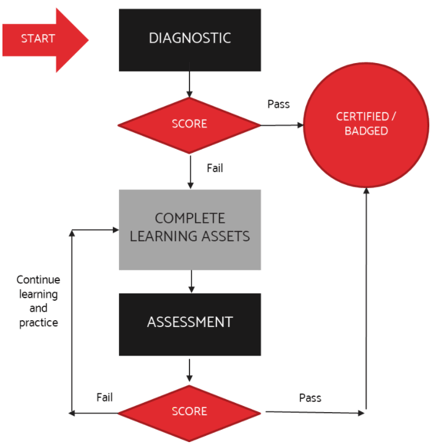

Like the diagnostics, the post-learning assessments immersed the learners in a simulated case study where they had to participate as a team member and demonstrate their skills by responding to the questions in the simulation. Learners who passed the assessment were certified in the topic area and received badges to recognize their achievements. Those who did not pass the assessment were redirected to the learning assets and also given a chance to practice within the simulation. In fact, they could selectively practice in the areas where they needed the most assistance. The diagnostic-to-learning-to-certification process is shown in Figure 2.

Figure 2. The Certification Process from Diagnostic to Badging

Because the diagnostic allowed 37% of learners to avoid redundant training, more than 68,000 hours of learning were saved so learners could focus on billable work, and a minimum of $1.2M in cost savings was realized.
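A back-of-envelope check shows how these figures relate to one another. The inputs are the article's own numbers, all of which are stated as lower bounds ("more than", "a minimum of"), so the derived ratios are indicative only and are not figures reported by ETU or the client.

```python
# Figures quoted in the article; each is a stated lower bound.
learners = 45_000         # "more than 45,000 learners"
pass_rate = 0.37          # share who already had the skills
hours_saved = 68_000      # "more than 68,000 hours"
cost_savings = 1_200_000  # "a minimum of $1.2M"

# Derived values (our arithmetic, not reported results).
exempt_learners = round(learners * pass_rate)
hours_per_exempt_learner = hours_saved / exempt_learners
savings_per_hour = cost_savings / hours_saved

print(f"Learners who could skip training: {exempt_learners:,}")
print(f"Implied hours saved per exempt learner: {hours_per_exempt_learner:.1f}")
print(f"Implied savings per hour saved: ${savings_per_hour:.2f}")
```

On these inputs, roughly 16,650 learners skipped training, which works out to about four hours saved per person across the three topics.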

Recommendations

Simulations, especially diagnostic simulations, can improve the learner experience and also save an organization substantial cost. However, not every course should use a simulation for diagnostics or learning. Here are three tips for determining if your courses should use simulations as a diagnostic tool or a learning methodology.

- Examine your scrap learning rate: Scrap learning is learning that has been acquired during training but has not been applied on the job. If this rate is high (greater than 30%), then simulations can help by improving the alignment of behaviors to the job and the alignment of training to those behaviors. Aligning a course to behaviors and business needs will reduce scrap learning.

- Determine which employees already have skills: Scrap or wasted learning also comes in the form of redundant training. If learners already know the content and can perform the skills, they do not need to go to training. If the problem that needs to be solved involves behaviors, not just knowledge, consider using a diagnostic simulation to determine who already has the skills associated with training.

- Focus on non-technical soft skills: Soft skills are often difficult to train because they require time, practice and nuance. Simulations accommodate these requirements while also providing content that can scale across the organization. Courses that focus on soft skills are strong candidates for diagnostics and learning simulations.
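The scrap learning check in the first tip can be sketched in a few lines. This example assumes a hypothetical follow-up survey in which each learner reports the fraction of the course they have applied on the job; the article does not say how the rate should be measured. Only the 30% threshold comes from the text.

```python
from statistics import mean

# The "greater than 30%" rule of thumb from the first tip.
SCRAP_THRESHOLD = 0.30


def scrap_learning_rate(applied_fractions):
    """Share of learning NOT applied on the job, averaged across learners.

    `applied_fractions` holds one value per learner between 0.0 and 1.0,
    taken from a hypothetical post-training survey.
    """
    if not applied_fractions:
        raise ValueError("need at least one survey response")
    if any(not 0.0 <= f <= 1.0 for f in applied_fractions):
        raise ValueError("applied fractions must be between 0.0 and 1.0")
    return 1.0 - mean(applied_fractions)


def is_simulation_candidate(applied_fractions):
    """True when the scrap learning rate exceeds the 30% threshold."""
    return scrap_learning_rate(applied_fractions) > SCRAP_THRESHOLD


# Hypothetical survey responses for one course.
responses = [0.9, 0.5, 0.4, 0.6]
rate = scrap_learning_rate(responses)
print(f"Scrap learning rate: {rate:.0%}")
print("Consider a simulation:", is_simulation_candidate(responses))
```

A course that clears the threshold is a candidate for closer review, not an automatic conversion; the other two tips still apply.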